The Bus to the Peak on Hong Kong Island

    /* Compile-time assertion: a false condition yields an array of size -1,
       which the compiler rejects. */
    #define CT_ASSERT(cond, msg) \
        typedef char msg[(cond) ? 1 : -1]

    int
    main(void)
    {
        int i = 10;

        CT_ASSERT(sizeof(i) == 4, size_must_be_4);

        return 0;
    }

Blogosphere (alternate: blogsphere) is the collective term encompassing all weblogs or blogs as a community or social network. Many weblogs are densely interconnected; bloggers read others' blogs, link to them, reference them in their own writing, and post comments on each other's blogs. Because of this, the interconnected blogs have grown their own culture.

Blogosphere is an essential concept for blogs. Blogs themselves are just web formats, whereas the blogosphere is a social phenomenon. What really differentiates blogs from webpages, forums, or chatrooms is that blogs can be part of that shifting Internet-wide social network.

Like biological systems, the blogosphere demonstrates all the classic ecological patterns: predators and prey, evolution and emergence, natural selection and adaptation. The number of links a blog attracts is frequently related to the quality and quantity of the information it presents. In other words, the most popular blogs tend to have the most inbound links, and the weakest blogs the fewest. The blog ecosystem has its own selection and adaptation mechanism: the good tends to become better, and the bad tends to disappear.

Through links and commentary, the blogosphere, with its self-perfecting mechanism, converts itself from a personal publishing system into a collaborative publishing system.

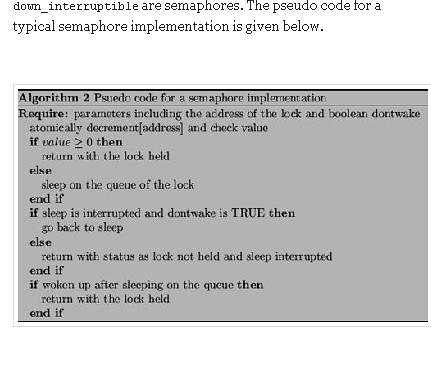

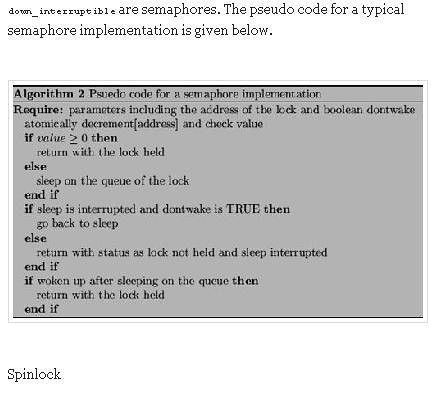

A semaphore is a locking primitive in which we relinquish the CPU (that is, go to sleep) if we do not get the lock. In the examples used in the previous article, down, down_write and down_interruptible are semaphore operations. The pseudocode for a typical semaphore implementation is given below.
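To make the "sleep if we do not get the lock" behaviour concrete, here is a minimal user-space sketch built on POSIX threads. It is not the kernel's implementation; the names `sketch_sem`, `sketch_down` and `sketch_up` are hypothetical and only mimic the shape of `down()` and `up()`.

```c
#include <pthread.h>

/* Hypothetical user-space counting semaphore; NOT the kernel's struct semaphore. */
struct sketch_sem {
    pthread_mutex_t guard;   /* protects count */
    pthread_cond_t  wakeup;  /* sleepers wait here */
    int             count;   /* number of free slots */
};

static void sketch_sem_init(struct sketch_sem *s, int count)
{
    pthread_mutex_init(&s->guard, NULL);
    pthread_cond_init(&s->wakeup, NULL);
    s->count = count;
}

/* down: take a slot, sleeping (and so giving up the CPU) while none is free. */
static void sketch_down(struct sketch_sem *s)
{
    pthread_mutex_lock(&s->guard);
    while (s->count == 0)
        pthread_cond_wait(&s->wakeup, &s->guard);
    s->count--;
    pthread_mutex_unlock(&s->guard);
}

/* up: give the slot back and wake one sleeper, if there is one. */
static void sketch_up(struct sketch_sem *s)
{
    pthread_mutex_lock(&s->guard);
    s->count++;
    pthread_cond_signal(&s->wakeup);
    pthread_mutex_unlock(&s->guard);
}
```

Initialising the count to 1 gives mutex-like behaviour, which is how the `down()`/`up()` pairs in this article are used.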

A spinlock is used in a multiprocessor environment. There may be cases in which one cannot go to sleep while waiting for a lock. Consider, for example, an interrupt handler in a Symmetric Multi-Processing environment (hereafter referred to as SMP). Any CPU may receive an interrupt from any device. If a device interrupts the system twice, let's say one interrupt goes to CPU 1 and the other to CPU 2. Both CPUs then execute the same interrupt handler for the device, and they need mutual exclusion to avoid races and unexpected results. It would be very bad for a CPU to go to sleep in an interrupt handler: an interrupt handler runs at a very high priority, preempting everything else, so we must deal with it quickly or the system will perform very badly. So what do we do? We spin on the lock held by the other CPU, assuming that the other CPU will not hold it for long. Once we get the lock, we finish the interrupt handler and continue. (NOTE: The assumption here is that interrupt handlers run fast; otherwise the spinlock will spin for a long time.)
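The spinning itself can be sketched in a few lines of user-space C11. This is only an illustration of busy-waiting on a flag, assuming `<stdatomic.h>` is available; the kernel's `spinlock_t` also deals with interrupts and preemption, which this sketch ignores. All `sketch_` names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical user-space spinlock; the kernel's spinlock_t is more involved. */
struct sketch_spinlock {
    atomic_flag held;
};

#define SKETCH_SPINLOCK_INIT { ATOMIC_FLAG_INIT }

/* Try once: true if we took the lock, false if someone else holds it. */
static bool sketch_spin_trylock(struct sketch_spinlock *l)
{
    return !atomic_flag_test_and_set_explicit(&l->held, memory_order_acquire);
}

/* Busy-wait until the lock is free; never sleeps, so never gives up the CPU. */
static void sketch_spin_lock(struct sketch_spinlock *l)
{
    while (!sketch_spin_trylock(l))
        ;  /* spin */
}

static void sketch_spin_unlock(struct sketch_spinlock *l)
{
    atomic_flag_clear_explicit(&l->held, memory_order_release);
}
```

The loop in `sketch_spin_lock` burns CPU for as long as the holder keeps the lock, which is why the assumption that the critical section is short matters so much.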

Now that we have looked at the kinds of locking primitives, let us discuss when we need to lock data. First of all, remember that we need to lock only global variables and structures, since only they are prone to races. Local variables reside on the stack, and since each process on each processor has its own stack, there are no race conditions with local variables. We will consider the following cases
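As an aside on the stack-versus-global point, the following user-space sketch uses POSIX threads as a stand-in for kernel processes (an assumption, as are all the `sketch_` and `shared_` names). Each worker counts in a local variable without any lock and takes the lock only when it touches the global total.

```c
#include <pthread.h>

#define SKETCH_ITERATIONS 100000

static long shared_total;   /* global: visible to every thread */
static pthread_mutex_t shared_total_lock = PTHREAD_MUTEX_INITIALIZER;

static void *sketch_worker(void *unused)
{
    long local_count = 0;   /* local: lives on this thread's own stack */
    (void)unused;

    for (int i = 0; i < SKETCH_ITERATIONS; i++)
        local_count++;      /* no lock needed, nobody else can see it */

    pthread_mutex_lock(&shared_total_lock);
    shared_total += local_count;   /* shared data: update under the lock */
    pthread_mutex_unlock(&shared_total_lock);
    return NULL;
}

/* Runs two workers and returns the final shared total. */
long sketch_run_workers(void)
{
    pthread_t a, b;

    shared_total = 0;
    pthread_create(&a, NULL, sketch_worker, NULL);
    pthread_create(&b, NULL, sketch_worker, NULL);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return shared_total;
}
```

Without the mutex around `shared_total += local_count`, the two updates could interleave and one of them could be lost; the local loop needs no such protection.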

Surprisingly enough, there are only two small rules for locking. These rules are definitely not exhaustive; they are rules of thumb and form the basis of this article. From these rules we will derive more, using real-world examples to illustrate each of them. Let's now see what each rule means.

    /* Find the cache in the chain of caches. */
    down(&cache_chain_sem);
    /* the chain is never empty, cache_cache is never destroyed */
    if (clock_searchp == cachep)
        clock_searchp = list_entry(cachep->next.next,
                                   kmem_cache_t, next);
    list_del(&cachep->next);
    up(&cache_chain_sem);

    if (__kmem_cache_shrink(cachep)) {
        printk(KERN_ERR "kmem_cache_destroy: Can't free all objects %p\n",
               cachep);
        down(&cache_chain_sem);
        list_add(&cachep->next, &cache_chain);
        up(&cache_chain_sem);
        return 1;
    }

In the example above, we grab the cache_chain_sem lock twice. We release the lock before calling __kmem_cache_shrink() and grab it again only if necessary, that is, if __kmem_cache_shrink() returns a nonzero value. We could have held the lock for the entire duration and released it at the end, but that would conflict with the rules we stated above.

We would then be protecting code and not data. What we need to protect is the list that cachep is linked into, so we hold the lock only to keep the contents of that list from changing underneath us. What if we held the lock and __kmem_cache_shrink() turned out to be an extremely long function? Other routines waiting for that lock would really starve, especially since __kmem_cache_shrink() does not need to change that list. It would even be unfair to hold the lock and make merry while others are waiting for it.
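The same pattern can be tried outside the kernel. The sketch below is a user-space analogue, assuming POSIX threads; `sketch_chain`, `sketch_slow_release` and the other `sketch_` names are hypothetical stand-ins for the cache chain and `__kmem_cache_shrink()`. The lock is taken only inside the two helpers that touch the list, and the slow step runs with the lock released.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical singly linked registry standing in for the cache chain. */
struct sketch_node {
    struct sketch_node *next;
    int payload;
};

static struct sketch_node *sketch_chain;   /* the shared data being protected */
static pthread_mutex_t sketch_chain_lock = PTHREAD_MUTEX_INITIALIZER;

static void sketch_chain_add(struct sketch_node *n)
{
    pthread_mutex_lock(&sketch_chain_lock);
    n->next = sketch_chain;
    sketch_chain = n;
    pthread_mutex_unlock(&sketch_chain_lock);
}

/* Unlink n from the chain; returns 1 if it was found, 0 otherwise. */
static int sketch_chain_del(struct sketch_node *n)
{
    int found = 0;

    pthread_mutex_lock(&sketch_chain_lock);
    for (struct sketch_node **pp = &sketch_chain; *pp; pp = &(*pp)->next) {
        if (*pp == n) {
            *pp = n->next;
            found = 1;
            break;
        }
    }
    pthread_mutex_unlock(&sketch_chain_lock);
    return found;
}

/* Stand-in for slow work that never touches the chain; nonzero means failure. */
static int sketch_slow_release(struct sketch_node *n)
{
    return n->payload != 0;
}

/* Same shape as the excerpt: unlink, work unlocked, re-link on failure. */
int sketch_destroy(struct sketch_node *n)
{
    if (!sketch_chain_del(n))
        return 1;
    if (sketch_slow_release(n)) {   /* the lock is NOT held here */
        sketch_chain_add(n);
        return 1;
    }
    return 0;
}
```

Because the mutex is confined to `sketch_chain_add` and `sketch_chain_del`, the time it is held depends only on the list operations, not on how long the release step takes.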

This brings us to some important questions

These questions are answered in the articles to follow.
